My good friend Bill Wilder pinged me a while back and asked what date/time stamp is placed on the files in an Azure File share, and where it comes from. I thought that was a good question, and figured the only way to find out was to do a bit of trial & error. So this blog entry is for Bill, who can read it as soon as he digs out from under the 7 feet of snow he’s gotten in the past month.

You can use Azure File Services (which uses SMB) and map a share as a network drive, for example to H: with net use H: \\storage-account-name.file.core.windows.net\share-name. For details, read Get started with Azure File storage on Windows. This is for file storage only; if you are trying to create a website, just create a simple Web App. Per the scalability targets for blobs, queues, tables, and files, the number of storage accounts per subscription is 200, which includes both Standard and Premium storage accounts. If you require more than 200 storage accounts, make a request through Azure Support. The Azure Storage team will review your business case and may approve up to 250 storage accounts.

There are multiple cases I want to look at.

- Use PowerShell to transfer a file.

- Log into a VM, attach the file share, and transfer the files between the VM and the desktop using the Windows Clipboard.

- Write an application that uses the storage client library to transfer files from the desktop to the file share.

- Use AzCopy to transfer files between the desktop and the file share.

While writing this, I found that if I copy a file from one folder to another in Windows 8, it changes the Created Date to the desktop’s current date/time and leaves the Modified Date as is. File Explorer shows the Modified Date. This seems odd to me, but if you think of the Created Date as the date the file was first put in its current location, it could make sense. What’s weird is that it is really easy to end up with a file whose Created Date comes long after its Modified Date. I mention this because there is similar behavior in some of the following cases.
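Python’s standard library makes the same distinction between a copy that keeps the original Modified Date and one that gets a fresh one. This is only a rough analogy and is not what File Explorer does internally. shutil.copy2 copies the file’s metadata, including its modification time. shutil.copy copies only the contents and permission bits, so the new file is stamped with the current time. The file names and the 2007 timestamp below are hypothetical.

```python
import os
import shutil
import tempfile
from datetime import datetime, timezone

# Hypothetical timestamp: 7/5/2007 7:52:04 AM PDT, expressed in UTC.
OLD_MTIME = datetime(2007, 7, 5, 14, 52, 4, tzinfo=timezone.utc).timestamp()


def compare_copies(folder: str) -> tuple:
    """Copy one file two ways and return (copy2 mtime, copy mtime)."""
    src = os.path.join(folder, "Chihuly.jpg")
    with open(src, "wb") as f:
        f.write(b"placeholder bytes")
    os.utime(src, (OLD_MTIME, OLD_MTIME))  # pin access and modified time to 2007

    # copy2 also copies metadata, so the copy keeps the 2007 modified time.
    kept = shutil.copy2(src, os.path.join(folder, "copy2.jpg"))
    # copy copies contents and permission bits only, so mtime becomes "now".
    fresh = shutil.copy(src, os.path.join(folder, "copy.jpg"))
    return os.stat(kept).st_mtime, os.stat(fresh).st_mtime


with tempfile.TemporaryDirectory() as folder:
    kept_mtime, fresh_mtime = compare_copies(folder)
    print(datetime.fromtimestamp(kept_mtime, tz=timezone.utc))   # 2007-07-05 14:52:04+00:00
    print(datetime.fromtimestamp(fresh_mtime, tz=timezone.utc))  # the current time
```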

For the purposes of these tests, I set the time on my desktop machine to be 5 minutes behind so I could discern between the desktop machine’s date/time and the VM’s date/time (which I am referring to as actual date/time). The tests were run on 2/9/2015 and all of the times are in Pacific Standard Time.

Note: I tried to set the time difference to an hour, but when I did that, I got 403 Forbidden errors with every call to Azure Storage, so I guess they have some kind of check on the time difference of your computer against the real date/time.
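The 403 fits the replay-protection check described in the Azure Storage Shared Key authorization docs. If a request’s date is more than 15 minutes away from the service’s clock, the service rejects it with 403 (Forbidden). Below is a minimal sketch of that comparison. It assumes the window is symmetric, and the function name is hypothetical, not part of any Azure SDK.

```python
from datetime import datetime, timedelta, timezone

# Assumed tolerance, based on the 15-minute window in the Shared Key docs;
# treating it as symmetric (clock ahead or behind) is an assumption.
MAX_SKEW = timedelta(minutes=15)


def request_date_accepted(client_clock: datetime, service_clock: datetime) -> bool:
    """Return True if the client's request timestamp falls inside the window."""
    return abs(service_clock - client_clock) <= MAX_SKEW


actual = datetime(2015, 2, 10, 0, 1, tzinfo=timezone.utc)  # 2/9/2015 4:01 PM PST
print(request_date_accepted(actual - timedelta(minutes=5), actual))  # True: 5 minutes behind
print(request_date_accepted(actual - timedelta(hours=1), actual))    # False: an hour behind
```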

I’ve included the PowerShell commands, C# code for using the storage client library, and AzCopy commands, so you can replicate these tests if you want to and aren’t familiar with one of the methods.

Using PowerShell

Note: You may need to install the most recent version of the Azure PowerShell cmdlets from GitHub. At the time of this writing, it is 0.8.11. You can also use the Web Platform Installer, but I’m not a fan because there’s no telling what it will install along with whatever you asked for. Last time I used it to install the Azure cmdlets, it installed the newest version of the Azure Tools/SDK, which I wasn’t ready to install (2.5 has breaking changes). If you go to the link above to GitHub, you can download the Windows Standalone version (it’s an msi) and install it.

To find which version you have, you can run PowerShell and type the following:

> Get-Module Azure

I’m going to use the PowerShell ISE so I can save my commands as a script in case I want to use them later. First I’m going to set up a couple of variables for storage account name and key. This makes it easy to just modify those fields and run the script for a different storage account. Then I’m going to create the context for the storage account. This tells which storage account to use when this variable is used in subsequent commands.

$storageAcctName = “your storage account name”

$storageAcctKey = “your storage account key ending with ”

$ctx = New-AzureStorageContext $storageAcctName $storageAcctKey

Next, I’m going to create a new file share and retain a reference to it ($s). It knows which storage account to use because I’m supplying the context I just defined. I’m naming my file share “contosofileshare”.

$s = New-AzureStorageShare contosofileshare -Context $ctx

To see the file shares set up in a storage account, you can use this command:

Get-AzureStorageShare -Context $ctx

So now I have a file share. I’m going to create a VM in Azure and RDP into it. If you don’t know how to create a VM in Azure, download the Fundamentals of Azure book from Microsoft Press (it’s free!) and go to the chapter on VMs; it has follow-along instructions that show you how to create a VM. (Disclaimer: I’m one of the authors of the book. I get no royalties, so please don’t think I’m recommending the book for financial reasons!)

After logging into the VM, I’m going to mount my file share. First I need a command prompt window. (If your VM is running Windows Server 2012, it works like Windows 8: click the Windows button to go to the Start screen, start typing “Command Prompt” to search for it, and click it when it shows up on the right side.) I can use a NET USE command to attach the file share. The NET USE command looks like this:

NET USE z: \\storageaccountname.file.core.windows.net\contosofileshare /u:storageaccountname storageaccountkey

After this is done, you can type NET USE and it will show you all of the file shares available and the drive letter each one is mapped to. I mapped mine to z:. Now I can open File Explorer and see what’s on the Z: drive.
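The UNC path in the NET USE command always has the same shape: two backslashes, the account’s file endpoint, a backslash, then the share name. Here is a small sketch that assembles the command from its parts. It builds the string but doesn’t run it. The helper name is hypothetical, and the placeholder values are the ones used above.

```python
def net_use_command(drive: str, account: str, share: str, key: str) -> str:
    """Build (but do not run) a NET USE command that maps an Azure file share."""
    # UNC path: two leading backslashes, the account's file endpoint, then the share.
    unc = "\\\\" + account + ".file.core.windows.net\\" + share
    return f"net use {drive} {unc} /u:{account} {key}"


print(net_use_command("z:", "storageaccountname", "contosofileshare", "storageaccountkey"))
# net use z: \\storageaccountname.file.core.windows.net\contosofileshare /u:storageaccountname storageaccountkey
```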

Back in PowerShell, I’m going to upload a file called Chihuly.jpg. On the desktop, if I right-click this file and look at its properties (Details):

- Created Date: 11/18/2014 12:21:38 PM.

- Modified Date: 7/5/2007 7:52:04 AM.

- Actual time: 2/9/2015 4:01 PM.

- Desktop time: 2/9/2015 3:56 PM.
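If you’d rather script these checks than open the Properties dialog each time, here is a minimal Python sketch that reads the same two values. The function name file_dates is hypothetical. The Modified Date maps to st_mtime on every platform. Where the Created Date lives depends on the OS and Python version, so the sketch returns None when it can’t find one.

```python
import os
from datetime import datetime, timezone
from typing import Dict, Optional


def file_dates(path: str) -> Dict[str, Optional[datetime]]:
    """Return the Created and Modified dates of a local file, in UTC."""
    st = os.stat(path)
    modified = datetime.fromtimestamp(st.st_mtime, tz=timezone.utc)
    # st_birthtime is the creation time where Python exposes it (macOS/BSD,
    # and Windows on Python 3.12+). On older Windows Pythons, st_ctime held
    # the creation time; on Linux st_ctime is the metadata-change time,
    # so it is not used there.
    birth = getattr(st, "st_birthtime", None)
    if birth is None and os.name == "nt":
        birth = st.st_ctime
    created = datetime.fromtimestamp(birth, tz=timezone.utc) if birth is not None else None
    return {"created": created, "modified": modified}
```

For example, file_dates(r"D:\_temp\_AzureFilesDownloaded\Chihuly.jpg") returns both dates. Comparing them with the desktop and actual times shows which clock each value came from.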

I’m going to upload the file and see what it looks like on the other side. The following command in PowerShell uploads the file.

Set-AzureStorageFileContent -Share $s -Source D:\_temp\_AzureFilesToUpload\Chihuly.jpg

Now if I go to the File Explorer on the VM and navigate to the Z: share and look at that file, the properties displayed are these:

- Created Date: 2/9/2015 4:01 PM

- Modified Date: 2/9/2015 4:01 PM

So using PowerShell to upload files to an Azure file share changes both Created Date and Modified Date to the current date/time. The date/time on the desktop machine is not used.

Where does Azure get the Created Date and Modified Date – from a time server somewhere? From the server holding the storage? That’s the question.

Now I’m going to download the file using PowerShell and see what the date/time stamps are on the downloaded file. The following command in PowerShell downloads the file.

Get-AzureStorageFileContent -Share $s -Path Chihuly.jpg -Destination d:\_temp\_AzureFilesDownloaded

Desktop time: 6:15 PM

Actual time: 6:20 PM

After the download:

Created Date: 2/9/2015 6:15 PM

Modified Date: 2/9/2015 6:15 PM

From this, we can see that using PowerShell to download files from an Azure file share changes the Modified Date to the date/time on the local machine. In this case, it sets the Created Date to the time on the local machine, but in some test cases, it set it to a few minutes before that. Where does it get the value for created date?

Copy and Paste

Now I’m going to try the same thing with copy (desktop) and paste (VM). I copy the file on the desktop into my Windows Clipboard (Ctrl-C), then click on the VM, go to the share, and paste the file (Ctrl-V). This is interesting.

Desktop time: 3:59 PM

Actual time: 4:04 PM

Desktop Created Date: 11/18/2014 12:21 PM PST

Desktop Modified Date: 7/5/2007 7:52 AM

Copy/Paste results:

File Share Created Date: 2/9/2015 4:04 PM

File Share Modified Date: 7/5/2007 7:52 AM

From this, I’m going to surmise that using copy and paste to upload files to an Azure file share changes the created date to the current date/time, but leaves the modified date as the original value. This is the same behavior as Windows.

Now I’m going to try copying the file from the Azure file share (via the VM) to the local desktop and check the dates.

Desktop time: 6:13 PM

Actual time: 6:18 PM

Created date: 6:13 PM

Modified date: 7/5/2007 7:52 AM

Using copy and paste to download files from an Azure file share changes the created date to the current date/time on the desktop, but leaves the modified date unchanged: it is the same value as that of the file on the file share. This is the same behavior as Windows.

Storage Client Library

What if I write some code to upload and download files to/from the file share? Let’s look at what that looks like.

First, I need a method to get a reference to the directory on the file share that I’m going to use. This will be used for both the upload and the download. Comments inline tell how this works. It’s very similar to blob storage.

Next, we need code for doing the upload and download. Each of these will call the code above to get the reference to the directory on the file share.

Here is the code to upload a file. After getting the reference to the directory on the file share, this gets a reference to the file and uploads to that file. SubFolderPath is the path on the share to be used. If this is blank, the file goes in the root of the share.
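To show where a file ends up, here is a Python sketch that builds the file’s REST endpoint URL. The pattern is https://<account>.file.core.windows.net/<share>/<directories>/<file>, and an empty subfolder path puts the file in the root of the share. It doesn’t use the storage client library, and the function and parameter names are hypothetical.

```python
from urllib.parse import quote


def file_url(account: str, share: str, sub_folder_path: str, file_name: str) -> str:
    """Build the REST URL of a file in an Azure file share.

    An empty sub_folder_path places the file in the root of the share.
    """
    parts = [share]
    # Accept either slash style in the subfolder path and drop empty pieces.
    parts += [p for p in sub_folder_path.replace("\\", "/").split("/") if p]
    parts.append(file_name)
    # Percent-encode each path segment (spaces and the like).
    path = "/".join(quote(p) for p in parts)
    return f"https://{account}.file.core.windows.net/{path}"


print(file_url("storageaccountname", "contosofileshare", "", "Chihuly.jpg"))
# https://storageaccountname.file.core.windows.net/contosofileshare/Chihuly.jpg
```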

And here is the code to download a file. The localFolderPath is the destination on the local machine for the Download directory where the files will be placed.

So now let’s run our tests using the code above.

Desktop time: 5:50 PM

Actual time: 5:55 PM

After uploading the file, the results are as follows:

Created Date: 2/9/2015 5:55 PM

Modified Date: 2/9/2015 5:55 PM

From this, I’m going to surmise that when you use the storage client library to upload a file, the Created Date and Modified Date are both set to the actual current date/time.

This makes sense. The PowerShell upload calls the REST API, and so does the storage client library.

When I use the storage client library to download the file to the desktop, what do I get?

Desktop time: 6:21 PM

Actual time: 6:26 PM

After downloading the file, the results are as follows:

Created Date: 2/5/2015 5:27 PM

Modified Date: 6:21 PM

It is setting the Modified Date to the date/time on the desktop machine. Where’s that created date coming from?

What about AzCopy?

This should behave the same as the storage client library and PowerShell, but I’m going to try it to be thorough. You can download AzCopy and learn more about it from the AzCopy documentation. I’m going to list the commands that I used to upload and download files. One thing to note: there is a switch you can add (/mt) that will retain the modified date/time of the blob when you download it. I ran these tests without the /mt switch because I want to see what it gets set to by default.
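Conceptually, a switch like /mt does one extra step after the download: it copies the source’s Last-Modified value onto the local file. Here is a minimal sketch of that step, assuming you already have the Last-Modified value from the HTTP response. The helper name is hypothetical, and this is not how AzCopy itself is implemented.

```python
import os
from email.utils import parsedate_to_datetime


def apply_last_modified(local_path: str, last_modified_header: str) -> float:
    """Set a downloaded file's modified time from an HTTP Last-Modified value.

    last_modified_header is an RFC 1123 date such as
    'Tue, 10 Feb 2015 01:55:00 GMT'. Returns the POSIX timestamp applied.
    """
    ts = parsedate_to_datetime(last_modified_header).timestamp()
    # Keep the current access time; only the modified time is replaced.
    atime = os.stat(local_path).st_atime
    os.utime(local_path, (atime, ts))
    return ts
```

The sample header corresponds to 2/9/2015 5:55 PM PST, the upload time from the storage client library test.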

Desktop time: 4:07 PM

Actual time: 4:12 PM

The command looks like this:

AzCopy d:\_temp\_AzureFilesToUpload https://storageaccountname.file.core.windows.net/sharename/ Chihuly.jpg /DestKey:storageaccountkey

After uploading the file, the results are as follows:

Created Date: 4:12 PM

Modified Date: 4:12 PM

When uploading a file using AzCopy, the Created Date and Modified Date are both set to the current date/time.

Desktop time: 6:20 PM

Actual time: 6:25 PM

The download command looks like this. d:\_temp\_AzureFilesDownloaded is the local directory I’m downloading the files to.

AzCopy https://storageaccountname.file.core.windows.net/sharename/ d:\_temp\_AzureFilesDownloaded Chihuly.jpg /SourceKey:storageaccountkey

Created Date: 6:20 PM

Modified Date: 6:20 PM

When downloading a file using AzCopy, the Created Date and Modified Date are both set to the date/time of the local desktop. (It could be that this Created Date is sometimes earlier than Modified Date; I only ran this test a couple of times.)

RESULTS

Uploading files from the desktop to the file share:

PowerShell, Storage Client Library, AzCopy: Uploading files to an Azure file share changes the Created Date and Modified Date to the current date/time.

Copy/Paste: Uploading files changes Created Date to the current date/time, but retains the original Modified Date. This is the same behavior as Windows.

Downloading files:

PowerShell, Storage Client Library, AzCopy: Sets Created Date and Modified Date to the date/time on the desktop. In some cases, the Created Date was actually set earlier than the modified date, so I’m not sure it’s actually using the desktop date/time for that value.

Copy/Paste: Changes the Created Date to the desktop date/time, leaves the Modified Date unchanged.

How does this compare to blob storage?

The deal with the Created Date is just weird, so I wanted to compare this behavior with that of uploading and downloading files using the storage client library and AzCopy to or from blob storage.

When you upload a blob using either method, it sets the Last Modified Date to the current date/time. (There is no created date property on blobs.) This is consistent with uploading files to the file share using PowerShell, the Storage Client Library, and AzCopy.

When you download a blob from blob storage using AzCopy, it sets the Created Date and Modified Date to the current date/time.

When you download a blob from blob storage using the storage client library, I saw some very strange results:

Desktop time: 6:40 PM

Actual time: 6:45 PM

Last Modified Date on the blob is 6:44 PM

Download blob.

Created Date: 2/5/2015 5:27 PM

Modified Date: 6:22 PM

Remember that unless specified, all of the dates are 2/9/2015. I have no idea where it’s getting that value for Created Date. Note that the Created Date is the same one given to the file when downloading it from the file share using the storage client library. Coincidence? Probably not.

Overall results

In most cases, the date/time stamps assigned to the files as they are transferred between a desktop and an Azure file share make sense. The one case I have no explanation for is where the Created Date comes from when downloading a file to the desktop. While it is frequently equal to the Modified Date, it is not always. Does Azure get this value from a time server somewhere, from a machine hosting storage in Azure, or from somewhere else? I don’t know. I’ve submitted this question to the Azure MVP distribution list, and if I get an answer, I’ll update this blog entry.

In the meantime, if you decide to write your own syncing software to sync file shares or blobs, I would consider using a hash of the file or blob to discover if the files are actually different, and use the Modified Date and not the Created Date.
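Here is a minimal sketch of that sync check. It assumes the remote blob or file has a Content-MD5 property, which Azure stores as the Base64 encoding of the 16-byte MD5 digest. That property isn’t always populated, so the sketch falls back to the Modified Date and never looks at the Created Date. The function names are hypothetical.

```python
import base64
import hashlib
from datetime import datetime
from typing import Optional


def content_md5(path: str) -> str:
    """Base64-encoded MD5 of a file, the encoding used by Content-MD5."""
    md5 = hashlib.md5()
    with open(path, "rb") as f:
        # Hash in 1 MB chunks so large files don't have to fit in memory.
        for chunk in iter(lambda: f.read(1024 * 1024), b""):
            md5.update(chunk)
    return base64.b64encode(md5.digest()).decode("ascii")


def needs_sync(local_path: str, local_modified: datetime,
               remote_md5: Optional[str], remote_modified: datetime) -> bool:
    """Decide whether the local and remote copies differ.

    Prefer the content hash; if the remote copy has no stored MD5,
    fall back to comparing Modified Dates (never Created Dates).
    """
    if remote_md5:
        return content_md5(local_path) != remote_md5
    return local_modified != remote_modified
```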

Tags: Azure, Azure Files, Azure Storage

Overview

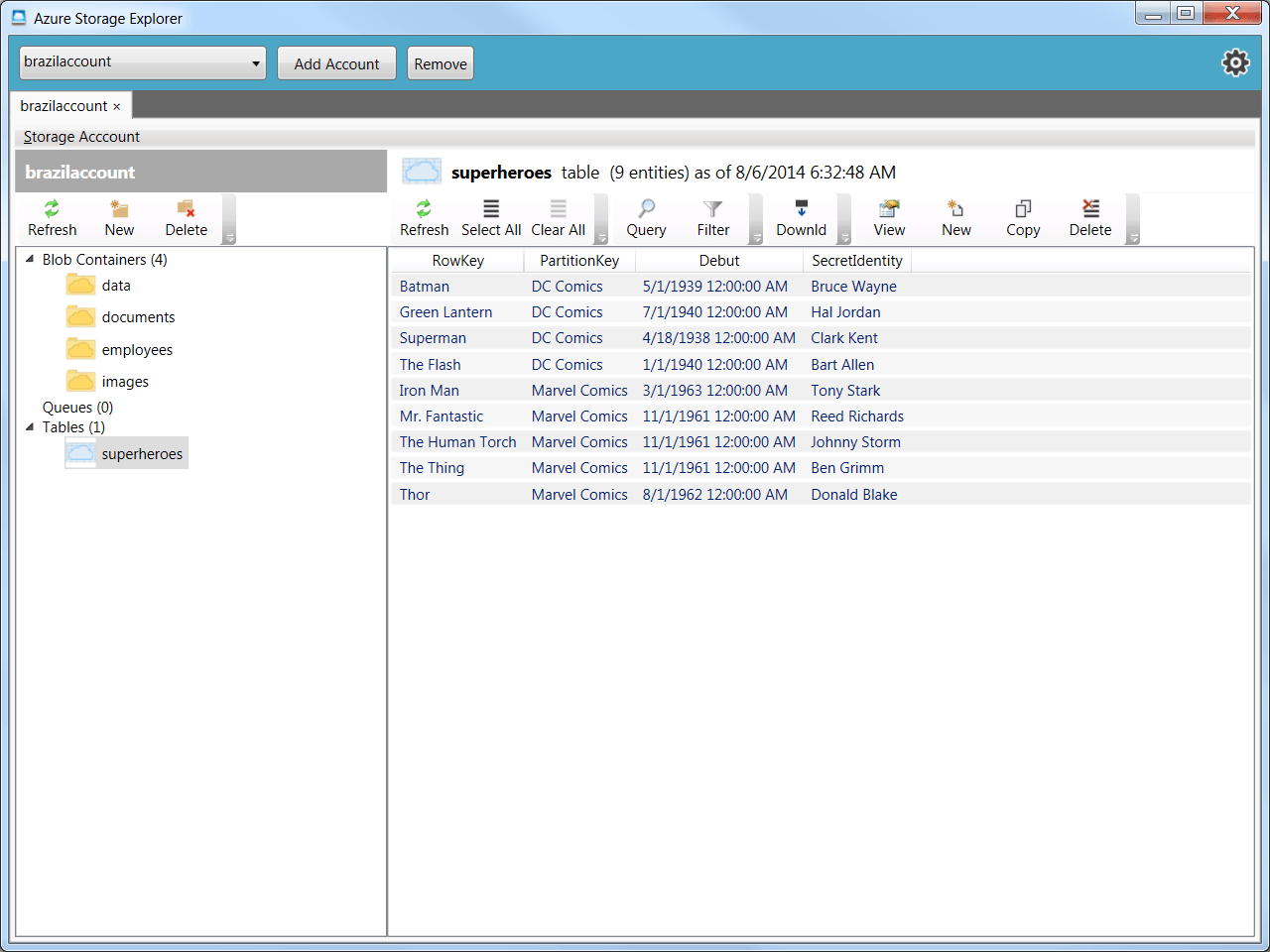

Microsoft Azure Storage Explorer is a standalone app that makes it easy to work with Azure Storage data on Windows, macOS, and Linux.

In this article, you'll learn several ways of connecting to and managing your Azure storage accounts.

Prerequisites

The following versions of Windows support Storage Explorer:

- Windows 10 (recommended)

- Windows 8

- Windows 7

For all versions of Windows, Storage Explorer requires .NET Framework 4.7.2 at a minimum.

The following versions of macOS support Storage Explorer:

- macOS 10.12 Sierra and later versions

Storage Explorer is available in the Snap Store for most common distributions of Linux. We recommend Snap Store for this installation. The Storage Explorer snap installs all of its dependencies and updates when new versions are published to the Snap Store.

For supported distributions, see the snapd installation page.

Storage Explorer requires the use of a password manager. You might have to connect to a password manager manually. You can connect Storage Explorer to your system's password manager by running the following command:

Storage Explorer is also available as a .tar.gz download. If you use the .tar.gz, you must install dependencies manually. The following distributions of Linux support .tar.gz installation:

- Ubuntu 20.04 x64

- Ubuntu 18.04 x64

- Ubuntu 16.04 x64

The .tar.gz installation might work on other distributions, but only these listed ones are officially supported.

For more help installing Storage Explorer on Linux, see Linux dependencies in the Azure Storage Explorer troubleshooting guide.

Download and install

To download and install Storage Explorer, see Azure Storage Explorer.

Connect to a storage account or service

Storage Explorer provides several ways to connect to Azure resources:

Sign in to Azure

Note

To fully access resources after you sign in, Storage Explorer requires both management (Azure Resource Manager) and data layer permissions. This means that you need Azure Active Directory (Azure AD) permissions to access your storage account, the containers in the account, and the data in the containers. If you have permissions only at the data layer, consider choosing the Sign in using Azure Active Directory (Azure AD) option when attaching to a resource. For more information about the specific permissions Storage Explorer requires, see the Azure Storage Explorer troubleshooting guide.

In Storage Explorer, select View > Account Management or select the Manage Accounts button.

ACCOUNT MANAGEMENT now displays all the Azure accounts you're signed in to. To connect to another account, select Add an account...

The Connect to Azure Storage dialog opens. In the Select Resource panel, select Subscription.

In the Select Azure Environment panel, select an Azure environment to sign in to. You can sign in to global Azure, a national cloud or an Azure Stack instance. Then select Next.

Tip

For more information about Azure Stack, see Connect Storage Explorer to an Azure Stack subscription or storage account.

Storage Explorer will open a webpage for you to sign in.

After you successfully sign in with an Azure account, the account and the Azure subscriptions associated with that account appear under ACCOUNT MANAGEMENT. Select the Azure subscriptions that you want to work with, and then select Apply.

EXPLORER displays the storage accounts associated with the selected Azure subscriptions.

Attach to an individual resource

Storage Explorer lets you connect to individual resources, such as an Azure Data Lake Storage Gen2 container, using various authentication methods. Some authentication methods are only supported for certain resource types.

| Resource type | Azure AD | Account Name and Key | Shared Access Signature (SAS) | Public (anonymous) |
|---|---|---|---|---|
| Storage accounts | Yes | Yes | Yes (connection string or URL) | No |
| Blob containers | Yes | No | Yes (URL) | Yes |
| Gen2 containers | Yes | No | Yes (URL) | Yes |
| Gen2 directories | Yes | No | Yes (URL) | Yes |
| File shares | No | No | Yes (URL) | No |
| Queues | Yes | No | Yes (URL) | No |
| Tables | No | No | Yes (URL) | No |

Storage Explorer can also connect to a local storage emulator using the emulator's configured ports.

To connect to an individual resource, select the Connect button in the left-hand toolbar. Then follow the instructions for the resource type you want to connect to.

When a connection to a storage account is successfully added, a new tree node will appear under Local & Attached > Storage Accounts.

For other resource types, a new node is added under Local & Attached > Storage Accounts > (Attached Containers). The node will appear under a group node matching its type. For example, a new connection to an Azure Data Lake Storage Gen2 container will appear under Blob Containers.

If Storage Explorer couldn't add your connection, or if you can't access your data after successfully adding the connection, see the Azure Storage Explorer troubleshooting guide.

The following sections describe the different authentication methods you can use to connect to individual resources.

Azure AD

Storage Explorer can use your Azure account to connect to the following resource types:

- Blob containers

- Azure Data Lake Storage Gen2 containers

- Azure Data Lake Storage Gen2 directories

- Queues

Azure AD is the preferred option if you have data layer access to your resource but no management layer access.

- Sign in to at least one Azure account using the steps described above.

- In the Select Resource panel of the Connect to Azure Storage dialog, select Blob container, ADLS Gen2 container, or Queue.

- Select Sign in using Azure Active Directory (Azure AD) and select Next.

- Select an Azure account and tenant. The account and tenant must have access to the Storage resource you want to attach to. Select Next.

- Enter a display name for your connection and the URL of the resource. Select Next.

- Review your connection information in the Summary panel. If the connection information is correct, select Connect.

Account name and key

Storage Explorer can connect to a storage account using the storage account's name and key.

You can find your account keys in the Azure portal. Open your storage account page and select Settings > Access keys.

- In the Select Resource panel of the Connect to Azure Storage dialog, select Storage account.

- Select Account name and key and select Next.

- Enter a display name for your connection, the name of the account, and one of the account keys. Select the appropriate Azure environment. Select Next.

- Review your connection information in the Summary panel. If the connection information is correct, select Connect.
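The account name and key entered in these steps are the same two values a storage connection string carries. Here is a small sketch that assembles one. The function name and sample key are hypothetical. The endpoint suffix corresponds to the Azure environment selected above; global Azure uses core.windows.net, and national clouds use a different suffix.

```python
def account_key_connection_string(account: str, key: str,
                                  endpoint_suffix: str = "core.windows.net") -> str:
    """Assemble a storage connection string from an account name and key."""
    settings = {
        "DefaultEndpointsProtocol": "https",
        "AccountName": account,
        "AccountKey": key,
        "EndpointSuffix": endpoint_suffix,  # depends on the Azure environment
    }
    # Connection strings are semicolon-separated key=value pairs.
    return ";".join(f"{k}={v}" for k, v in settings.items())
```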

Shared access signature (SAS) connection string

Storage Explorer can connect to a storage account using a connection string with a Shared Access Signature (SAS). A SAS connection string looks like this:

- In the Select Resource panel of the Connect to Azure Storage dialog, select Storage account.

- Select Shared access signature (SAS) and select Next.

- Enter a display name for your connection and the SAS connection string for the storage account. Select Next.

- Review your connection information in the Summary panel. If the connection information is correct, select Connect.
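A SAS connection string uses the same semicolon-separated key=value layout as other storage connection strings. The SharedAccessSignature value is itself a query string full of = characters. A parser therefore has to split each setting on the first = only, as in this sketch. The function name and sample string are hypothetical, and the token is a truncated placeholder, not a working SAS.

```python
from typing import Dict


def parse_connection_string(conn: str) -> Dict[str, str]:
    """Split a storage connection string into its key=value settings."""
    settings: Dict[str, str] = {}
    for segment in conn.split(";"):
        if not segment:
            continue  # tolerate a trailing semicolon
        # Split on the first '=' only: the SAS token contains '=' itself.
        key, _, value = segment.partition("=")
        settings[key] = value
    return settings


sample = ("BlobEndpoint=https://myaccount.blob.core.windows.net/;"
          "SharedAccessSignature=sv=2019-12-12&ss=b&srt=sco&sp=rl&sig=abc%3D")
print(parse_connection_string(sample)["SharedAccessSignature"])
# sv=2019-12-12&ss=b&srt=sco&sp=rl&sig=abc%3D
```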

Shared access signature (SAS) URL

Storage Explorer can connect to the following resource types using a SAS URI:

- Blob container

- Azure Data Lake Storage Gen2 container or directory

- File share

- Queue

- Table

A SAS URI looks like this:

- In the Select Resource panel of the Connect to Azure Storage dialog, select the resource you want to connect to.

- Select Shared access signature (SAS) and select Next.

- Enter a display name for your connection and the SAS URI for the resource. Select Next.

- Review your connection information in the Summary panel. If the connection information is correct, select Connect.
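A SAS URI is the resource URL followed by the SAS token as its query string. Common token fields include sv (service version), sp (permissions), se (expiry), and sig (signature). Here is a sketch that separates the two parts with the standard library. The helper name and the sample URI are hypothetical placeholders. parse_qs also percent-decodes values and turns a literal + into a space, which is one reason signatures in SAS URIs are percent-encoded.

```python
from typing import Dict, Tuple
from urllib.parse import parse_qs, urlsplit


def split_sas_uri(uri: str) -> Tuple[str, Dict[str, str]]:
    """Separate a SAS URI into the resource URL and its SAS query parameters."""
    parts = urlsplit(uri)
    resource = f"{parts.scheme}://{parts.netloc}{parts.path}"
    # parse_qs returns lists; each SAS field appears once, so keep the first.
    params = {k: v[0] for k, v in parse_qs(parts.query).items()}
    return resource, params


resource, params = split_sas_uri(
    "https://myaccount.file.core.windows.net/myshare"
    "?sv=2019-12-12&sr=s&sp=rl&se=2021-01-01T00%3A00%3A00Z&sig=abc%3D")
print(resource)      # https://myaccount.file.core.windows.net/myshare
print(params["se"])  # 2021-01-01T00:00:00Z
```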

Local storage emulator

Storage Explorer can connect to an Azure Storage emulator. Currently, there are two supported emulators:

- Azure Storage Emulator (Windows only)

- Azurite (Windows, macOS, or Linux)

If your emulator is listening on the default ports, you can use the Local & Attached > Storage Accounts > Emulator - Default Ports node to access your emulator.

If you want to use a different name for your connection, or if your emulator isn't running on the default ports:

Start your emulator.

Important

Storage Explorer doesn't automatically start your emulator. You must start it manually.

In the Select Resource panel of the Connect to Azure Storage dialog, select Local storage emulator.

Enter a display name for your connection and the port number for each emulated service you want to use. If you don't want to use a service, leave the corresponding port blank. Select Next.

Review your connection information in the Summary panel. If the connection information is correct, select Connect.
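Both supported emulators listen on well-known default ports: 10000 for Blob, 10001 for Queue, and 10002 for Table. They also use path-style URLs under the fixed development account devstoreaccount1. Here is a sketch of the endpoints a custom port configuration resolves to. A port set to None stands in for leaving that service’s port blank. The function name is hypothetical.

```python
from typing import Dict, Optional

DEV_ACCOUNT = "devstoreaccount1"
DEFAULT_PORTS = {"blob": 10000, "queue": 10001, "table": 10002}


def emulator_endpoints(ports: Optional[Dict[str, Optional[int]]] = None,
                       host: str = "127.0.0.1") -> Dict[str, str]:
    """Return path-style emulator endpoints; a None port skips that service."""
    chosen = {**DEFAULT_PORTS, **(ports or {})}
    return {svc: f"http://{host}:{port}/{DEV_ACCOUNT}"
            for svc, port in chosen.items() if port is not None}


print(emulator_endpoints()["blob"])  # http://127.0.0.1:10000/devstoreaccount1
```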

Connect to Azure Cosmos DB

Storage Explorer also supports connecting to Azure Cosmos DB resources.

Connect to an Azure Cosmos DB account by using a connection string

Instead of managing Azure Cosmos DB accounts through an Azure subscription, you can connect to Azure Cosmos DB by using a connection string. To connect, follow these steps:

Under EXPLORER, expand Local & Attached, right-click Cosmos DB Accounts, and select Connect to Azure Cosmos DB.

Select the Azure Cosmos DB API, enter your Connection String data, and then select OK to connect the Azure Cosmos DB account. For information about how to retrieve the connection string, see Manage an Azure Cosmos account.

Connect to Azure Data Lake Store by URI

You can access a resource that's not in your subscription. You need someone who has access to that resource to give you the resource URI. After you sign in, connect to Data Lake Store by using the URI. To connect, follow these steps:

Under EXPLORER, expand Local & Attached.

Right-click Data Lake Storage Gen1, and select Connect to Data Lake Storage Gen1.

Enter the URI, and then select OK. Your Data Lake Store appears under Data Lake Storage.

This example uses Data Lake Storage Gen1. Azure Data Lake Storage Gen2 is now available. For more information, see What is Azure Data Lake Storage Gen1.

Generate a shared access signature in Storage Explorer

Account level shared access signature

Right-click the storage account you want to share, and then select Get Shared Access Signature.

In Shared Access Signature, specify the time frame and permissions you want for the account, and then select Create.

Copy either the Connection string or the raw Query string to your clipboard.

Service level shared access signature

You can get a shared access signature at the service level. For more information, see Get the SAS for a blob container.

Search for storage accounts

To find a storage resource, you can search in the EXPLORER pane.

As you enter text in the search box, Storage Explorer displays all resources that match the search value you've entered up to that point. This example shows a search for endpoints:

Note

To speed up your search, use Account Management to deselect any subscriptions that don't contain the item you're searching for. You can also right-click a node and select Search From Here to start searching from a specific node.

Next steps